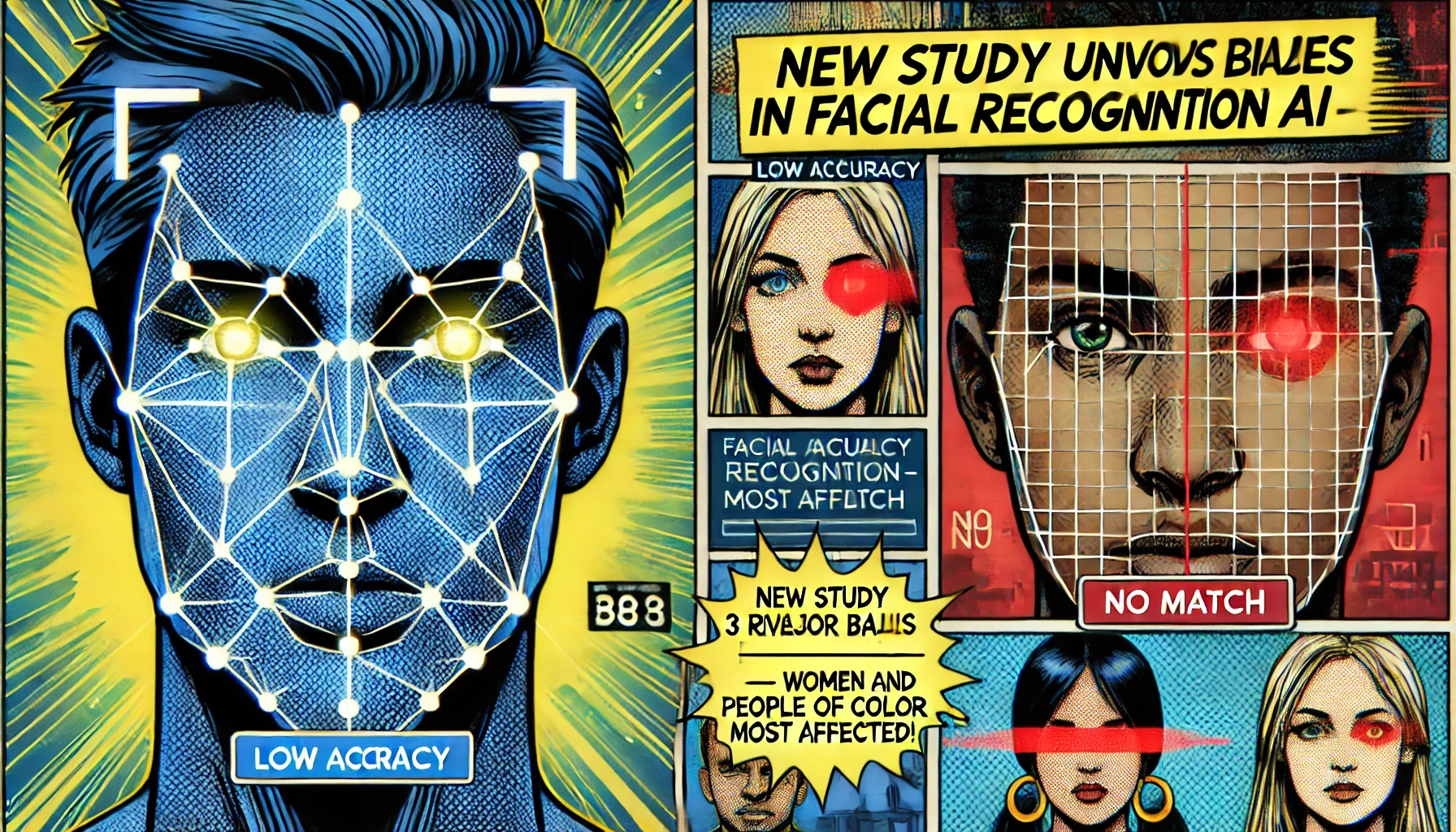

New Study Reveals Widespread Bias in Facial Recognition AI Systems

A recent, comprehensive study published by Tech Ethics Today has uncovered a disturbing level of bias embedded in several widely deployed facial recognition AI systems. Conducted by a collaborative international team of computer scientists, data ethicists, and civil rights researchers, the study found that these AI tools often misidentify individuals with darker skin tones and women at alarmingly high rates. Released in March 2025, the findings have since ignited fresh controversy around the ethical use of AI, especially in critical sectors like law enforcement, corporate hiring, and public surveillance.

For the report, the researchers tested facial recognition systems built by some of the world's most influential technology companies and reviewed them for racial and gender fairness.

The analysis highlighted a clear pattern: error rates were substantially higher for Black, Indigenous, and Asian individuals—especially women—compared to white male subjects. As more institutions turn to AI for decision-making, this research serves as a wake-up call to tech developers and policymakers alike, underlining the urgent need for ethical accountability and systemic reform.

Facial recognition technology has become deeply embedded in modern life, offering convenience and enhanced security through applications in smartphones, airports, government surveillance programs, and even workplace monitoring systems. Its rise has been fueled by rapid advances in machine learning and image processing. However, even as the technology becomes more powerful, ethical concerns—particularly regarding bias and fairness—have trailed closely behind.

These concerns trace back to the pivotal 2018 Gender Shades study by Joy Buolamwini of the MIT Media Lab and Timnit Gebru, which showed that major commercial AI systems performed significantly worse when classifying the faces of women and people with darker skin.

That research catalyzed widespread public debate and helped prompt bans on government use of facial recognition in cities like San Francisco and Portland. Despite such actions, the use of AI-driven recognition tools has expanded globally, often without sufficient safeguards or audits. The new study from Tech Ethics Today builds on this prior work with a much broader scope, evaluating multiple datasets, models, and use cases in real-world environments.

Key Facts & Details

Key Findings of the Study:

- Higher Error Rates for Darker Skin Tones: The systems misidentified Black women up to 34% more often than white men, who had the highest accuracy rates on every system tested. The disparity appeared across all companies evaluated.

- Gender Disparities in Identification: The algorithms struggled more to correctly identify female faces than male faces. For women of color, the error rates increased significantly, compounding the issue and leading to potentially serious consequences in high-stakes environments like airports and job screenings.

- Geographic and Dataset Bias: The study revealed that the training datasets used by most companies heavily favored Western-centric images: individuals from North America and Europe were overrepresented, while faces from Asia, Africa, and South America were underrepresented. This lack of diversity in training data directly limited the models’ ability to generalize accurately.
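To make the dataset-composition point concrete, here is a minimal Python sketch of how a team might check the regional balance of a training set. It is not the study's methodology: the function names, the region labels, the image counts, and the 5% cutoff are all hypothetical choices made for illustration.

```python
from collections import Counter


def representation_shares(labels):
    """Return each group's share of the dataset as a fraction of all samples."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, threshold=0.05):
    """List groups whose share falls below the threshold, sorted by name."""
    return sorted(group for group, share in shares.items() if share < threshold)


# Hypothetical metadata: one region label per training image (toy numbers).
region_labels = (
    ["North America"] * 50
    + ["Europe"] * 40
    + ["Asia"] * 6
    + ["Africa"] * 3
    + ["South America"] * 1
)

shares = representation_shares(region_labels)
flagged = underrepresented(shares)  # the 5% cutoff is an assumed audit threshold

for region, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{region}: {share:.1%}")
print("Below threshold:", flagged)
```

A check like this only surfaces imbalance in whatever labels the metadata already carries; it says nothing about image quality, lighting, or labeling errors, which can also affect how well a model generalizes.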

What Experts Say:

“When training data lacks diversity, the AI mirrors that bias in its predictions,” explained Dr. Lina Ramirez, co-author of the report and AI fairness researcher at the Global Institute for Ethical AI. “We are witnessing a technological mirror of societal inequalities, which is deeply problematic if these tools are used in public safety or employment.”

Statistics That Matter:

- Over 80% of the AI training datasets examined had fewer than 5% of images representing individuals with darker skin tones.

- Facial recognition software used by police departments showed a 25% higher false positive rate for Asian individuals compared to white individuals.

- Hiring platforms using facial analysis algorithms recommended white male candidates 40% more often than equally qualified female candidates of color.

- In airport screenings, the rate of false positive alerts for non-white passengers was nearly double the rate for white travelers.
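Figures such as "25% higher" or "nearly double" compare false positive rates between groups. The Python sketch below shows one common way such a comparison can be computed; it assumes the percentages are relative increases over a reference group, which the report summary does not spell out. The record format, group names, and counts are hypothetical toy data, not the study's results.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Compute the false positive rate for each group.

    records: iterable of (group, predicted_match, actual_match) tuples.
    FPR = false positives / all true non-matches within the group.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:  # only true non-matches count toward the FPR
            negatives[group] += 1
            if predicted:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items() if n > 0}


def relative_increase(rates, group, reference):
    """Fractional increase of one group's rate over the reference group's."""
    return rates[group] / rates[reference] - 1.0


# Hypothetical audit log (toy data): 10 true non-matches per group,
# plus 5 genuine matches per group that the FPR ignores.
records = (
    [("group_a", True, False)] * 1
    + [("group_a", False, False)] * 9
    + [("group_a", True, True)] * 5
    + [("group_b", True, False)] * 2
    + [("group_b", False, False)] * 8
    + [("group_b", True, True)] * 5
)

rates = false_positive_rates(records)
increase = relative_increase(rates, "group_b", reference="group_a")
print(rates)
print(f"group_b FPR is {increase:.0%} higher than group_a")
```

Reporting the per-group rates alongside the relative figure matters: a rate that doubles from 0.1% to 0.2% and one that doubles from 10% to 20% have very different practical consequences.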

Analysis & Impact

Impact on the Technology Industry:

- Erosion of Public Trust: Every time biased AI results in an unjust arrest or discriminatory job screening, public trust in technology takes another hit. That can damage brand reputations and reduce user engagement across platforms.

- Increased Regulatory Scrutiny: With mounting public pressure, legislative bodies in the U.S., EU, and Asia are proposing bills that would mandate regular bias audits, transparency in training data sources, and independent oversight boards.

- Corporate Reforms: Some major companies, in response to public outcry and legal pressure, have paused facial recognition development or pledged to improve dataset diversity, introduce fairness checks, and employ ethicists on AI teams.

Challenges & Risks:

- Lack of Global Standards: While there are ethical frameworks in development, there is still no globally enforced regulation ensuring fairness in AI, creating a fragmented and inconsistent landscape.

- Ethical Gray Areas: What constitutes “bias” or “fairness” can vary culturally and socially. Some developers argue it’s difficult to program fairness without societal consensus.

- Risk of Over-Reliance: As AI is increasingly trusted for critical tasks, over-reliance on flawed systems can have devastating outcomes—such as wrongful incarceration or systemic discrimination in hiring and financial lending.

- Cost Barriers to Fairness: Incorporating diverse datasets and performing regular bias audits can be costly, potentially discouraging smaller companies from prioritizing fairness.

Resources & References

- Tech Ethics Today – March 2025 AI Bias Study

- MIT Media Lab – Gender Shades Research, 2018

- Pew Research Center – Public Attitudes Toward Facial Recognition, 2024

- World Economic Forum – Guidelines on Ethical AI, 2023

- The New York Times – Reports on AI Misidentification in Law Enforcement, 2022

- AI Now Institute – Algorithmic Accountability Reports, 2021

- Wired Magazine – Feature on Bias in Hiring Algorithms, 2023

This new study offers compelling and urgent evidence that current facial recognition technologies, if left unchecked, can perpetuate and even exacerbate historical patterns of inequality and discrimination. As AI becomes more integrated into everyday decision-making processes, the stakes are too high to ignore these systemic issues.

Governments, developers, and everyday users must work together to demand more transparent, inclusive, and accountable AI practices.